As the AI hype wave (should we call it the wAIve?) rises, promising us an exhilarating ride while also threatening to wipe our professions off the face of the earth, it looks like we’re all in serious need of a systemic analysis of where we’re going and whether any of these promises of a brighter (or grimmer) future are actually true.

When talking to technical people, I hear opinions ranging from “I’m not allowing AI near my code!” to “I don’t need a dev team; AI can write all of my code!”, with the more balanced view sounding something like: “It’s a nice tool; it saves me time, but I’m still in control.”

Personal experiences and preferences differ. And this isn’t going to change. Our quirks and annoyances are what make us human, after all. The big question in my mind is not what’s going to happen to each individual engineer’s workflow, but rather how the introduction of AI-native development, or vibe coding, is going to impact the speed of software delivery, the stability and security of the systems we build, and ultimately the collective experience of working in IT.

Systems Thinking to the Rescue

Through the lens of systems thinking, every IT organization can be seen as an interplay of people, processes, and tools connected by information flows and wrapped in a thin layer of culture and semantics. No matter how advanced, AI is just another tool in this system. A mighty tool, one with far-reaching impact, but still just a tool.

So how is the introduction of AI going to impact the SDLC (software development lifecycle), as represented by the proverbial DevOps infinity loop? Below are three well-known balancing feedback loops that exist in the software delivery process, along with a quick (and admittedly superficial) analysis of AI’s impact on each of them.

Speed of Coding vs. Stability

One of the most obvious bottlenecks in the software delivery pipeline is the balancing feedback loop between the speed of delivery and production stability: the more changes we introduce -> the less stable the production environment becomes -> the more pushback we get from the operations team -> the slower the change rate.
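To make this balancing loop a bit more concrete, here is a toy simulation. It is a sketch under loudly stated assumptions, not a model of any real organization: the function name `steady_change_rate`, its parameters, and the rule that operations adjusts its deploy budget in proportion to the gap between observed incidents and the incident level it is willing to tolerate are all hypothetical. `coding_capacity` stands in for how many changes developers (with or without AI) can produce per period, and `defect_fraction` for the share of changes that cause an incident.

```python
def steady_change_rate(coding_capacity: float,
                       defect_fraction: float,
                       incident_tolerance: float,
                       gain: float = 2.0,
                       steps: int = 500) -> float:
    """Toy balancing loop: more changes -> more incidents -> ops pushback -> fewer changes.

    All names and parameters are hypothetical and unitless (think "changes per week").
    Returns the change rate the loop settles at after `steps` periods.
    """
    rate = 0.0  # changes that actually reach production per period
    for _ in range(steps):
        # Assumption: incidents grow linearly with the number of changes.
        incidents = defect_fraction * rate
        # Assumption: ops loosens or tightens the deploy budget in proportion to
        # how far incidents are from the level it is willing to tolerate.
        # (The update converges when 0 < gain * defect_fraction < 2.)
        rate += gain * (incident_tolerance - incidents)
        # Throughput is bounded below by zero and above by what can be coded.
        rate = min(max(rate, 0.0), coding_capacity)
    return rate


if __name__ == "__main__":
    # Hypothetical numbers: 10% of changes cause an incident, ops tolerates 1 per period.
    for capacity in (20, 40, 80):  # e.g. AI doubling and quadrupling coding output
        print(capacity, round(steady_change_rate(capacity, 0.10, 1.0), 2))
    # Better guardrails: halve the defect fraction instead.
    print(80, round(steady_change_rate(80, 0.05, 1.0), 2))
```

Under these assumptions the loop settles where incidents equal the tolerance, i.e. at `incident_tolerance / defect_fraction` changes per period, so the first three lines all print `10.0`. Quadrupling coding capacity leaves that number untouched; only lowering the defect fraction raises it, and the last line prints `20.0`.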

AI code generation accelerates only the first step of this loop. Evidently, if code generation tools aren’t accompanied by appropriate guardrails further down the line, no actual improvement can be achieved. Which brings us to the next point.

Number of Tests vs. Cycle Time

AI coding assistants are famously good at generating tests, it being a fairly deterministic, routine task. But as we all know, tests come at a cost: they require resources and time to execute. The standard feedback loop here is: quality goes down -> we write more tests (or add other checks to the delivery workflow) -> this slows down the pipeline -> we reduce the number of tests -> quality goes down.

AI-assisted coding is already more resource-intensive than the regular kind. But can we trust AI to define the proper balance between cost, speed and quality?
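The tests-versus-cycle-time loop can be sketched as a toy simulation too. The model below rests on hypothetical assumptions, not measurements: each test independently catches a given defect with probability `catch_per_test`, the pipeline runs tests sequentially at `minutes_per_test` each, and the team reacts to a slow pipeline by pruning tests and to escaped defects by adding them. The function name `run_test_loop` and all default numbers are made up for illustration.

```python
def run_test_loop(iterations: int,
                  tests: int = 10,
                  defects: float = 20.0,
                  catch_per_test: float = 0.1,
                  max_escaped: float = 2.0,
                  minutes_per_test: float = 3.0,
                  max_minutes: float = 45.0,
                  step: int = 5) -> list[tuple[int, float, float]]:
    """Toy model of the "number of tests vs. cycle time" balancing loop.

    All parameters are hypothetical. Returns (tests, escaped_defects, pipeline_minutes)
    for every iteration.
    """
    history = []
    for _ in range(iterations):
        # Assumption: each test independently catches a defect with probability
        # catch_per_test, so more tests -> fewer defects escape to production.
        escaped = defects * (1 - catch_per_test) ** tests
        # Assumption: tests run sequentially, so more tests -> slower pipeline.
        minutes = tests * minutes_per_test
        history.append((tests, escaped, minutes))
        if minutes > max_minutes:
            tests = max(0, tests - step)  # pipeline too slow -> prune tests
        elif escaped > max_escaped:
            tests += step  # quality dropped -> add tests
    return history


if __name__ == "__main__":
    # Quality needs ~22 tests, but the time budget only allows 15: the loop oscillates.
    print([t for t, _, _ in run_test_loop(6)])
    # Halve the cost per test (e.g. parallel runners): the loop settles.
    print([t for t, _, _ in run_test_loop(6, minutes_per_test=1.5)])
```

With the default numbers the first line prints `[10, 15, 20, 15, 20, 15]`: the test count swings back and forth forever. Halving the cost per test lets both constraints hold at once, and the second line settles at `[10, 15, 20, 25, 25, 25]`. In this toy model, adding tests faster (a bigger `step`) does not resolve the conflict; only changing the constraints does.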

Time To Resolution vs. Blast Radius

Common sense says that the faster we resolve an issue, the smaller the blast radius. And yet, if you study the history of major IT outages, in many of them the story goes like this: we had an issue, we thought we knew what the fix was, we applied the fix, and then everything went up in flames. More often than not, failing to allow enough time to analyze and verify a fix causes a problem much more severe than the original issue we set out to resolve.

Due to its predictive nature, today’s AI tends to opt for the most probable solution, giving us the shortest path to a result. In complex production scenarios, that shortcut may lead to exactly the kind of trouble described above.

Reliability-focused organizations will therefore be very cautious about letting AI agents manage, or even access, their production environments. That will leave us with a major bottleneck at the operations end of the pipeline and create a lot of stress and frustration upstream.

A Very Incomplete Summary

This is a blog post, not an extensive study. We’re very early in this journey, and much more research is needed before we can draw any conclusions. Systems thinking, while striving for a holistic view of the systems under analysis, is still limited by our knowledge of the system components and their relationships. For complex systems, this knowledge is never complete.

From this quick overview, it looks like quite some time will pass before AI brings a significant improvement to the SDLC. Some bottlenecks are still very hard to widen, and the increased velocity will have to be balanced with additional layers of verification until the cycle reaches a new point of equilibrium.

Another important topic I haven’t touched upon yet is how all this is going to impact collaboration in IT organizations. As we all know, at the end of the day it’s humans, and how they collaborate and communicate, that impact the delivery process the most. AI can definitely enhance our strategic and analytical capabilities, but on the other hand, with all the talk of one-person companies, how will it impact our basic communication skills? And won’t these become the next frontier in improving the ways we work?

What do you think? What stage of AI adoption are you at? Ready to let AI push code to your production environments?

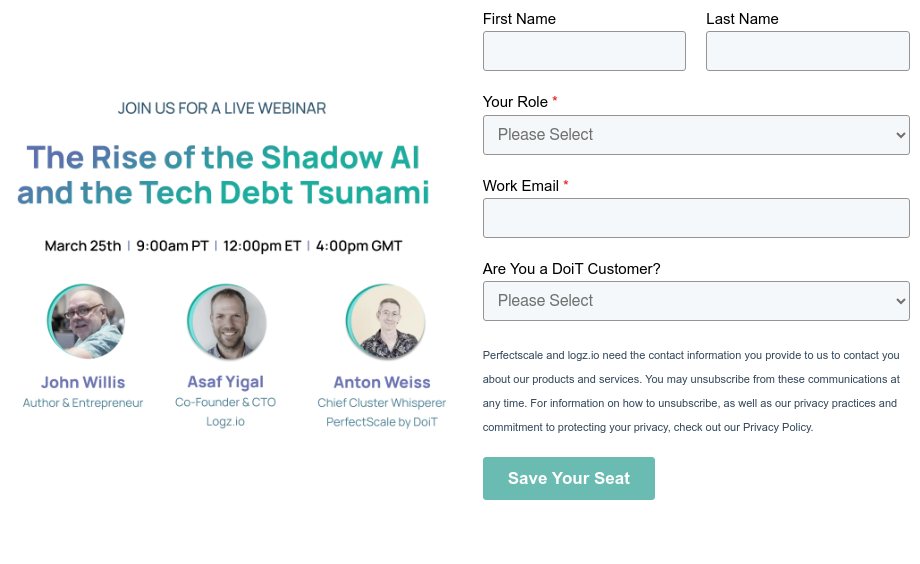

Interested to learn more? Join the DevOps thought leader John Willis, the observability expert Asaf Yigal (Logz.io) and the software delivery futurist Ant Weiss (PerfectScale) for a thought-provoking conversation on the organizational impact of AI - based on systems thinking, Dr. Deming’s Theory of Profound Knowledge and practical industry experience.
